Terraform is an infrastructure as code tool that lets you build, change, and version cloud and on-premises resources safely and efficiently.

Terraform creates and manages resources on cloud platforms and other services through their application programming interfaces (APIs). Providers let Terraform work with virtually any platform or service that has an accessible API.

With Terraform, you can automate the process of building infrastructure.

The steps for building everything up to an EKS cluster with Terraform are as follows.

1. Install Terraform

2. Authenticate to AWS

3. Set up the VPC

4. Set up EC2

5. Set up SSM

6. Set up EKS

1. Install Terraform

First, let's install Terraform.

Download the AMD64 (64-bit) build, unzip it, and put the ‘terraform’ executable in the C:\terraform folder.

Open Settings > System > About > Advanced system settings,

then go to Advanced > Environment Variables, select ‘Path’, and click Edit.

Click New and add ‘C:\terraform’.

Then open a terminal and check that terraform runs (for example, ‘terraform -version’).

*Additional setup

In VS Code, assign a keyboard shortcut to the ‘openInIntegratedTerminal’ command so the current path can be opened in a new terminal.

I also installed the two items shown above.

Then, as above, check that Terraform is recognized properly.

2. Authenticate to AWS

Next is AWS authentication.

Deciding how to authenticate: committing the Terraform files to GitHub could become a security issue if credentials end up in them.

**Very important**

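- AWS CLI configure: the keys are saved in files under the ‘.aws’ directory (‘~/.aws/credentials’).

- Environment variables: you have to authenticate again whenever the session ends (entering the keys every time).

- AWS SSO: you have to authenticate again (‘aws sso login’) after a set period. AWS SSO user sessions can be set to last between 1 and 12 hours.

- Setting credentials directly in the Terraform provider block: insecure, so not recommended.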

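Option 1 shouldn't be a problem, since the keys live in a different directory from the project.

Option 2 adds the hassle of re-authenticating whenever the session ends.

Option 3 likewise requires another SSO login once the session expires.

Option 4, if used at all, requires a carefully configured ‘.gitignore’.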

I'll use AWS CLI configure.

First, go to AWS IAM and create a group for managing Terraform.

I gave the group the AdministratorAccess permission.

Next, create a user and add it to the group.

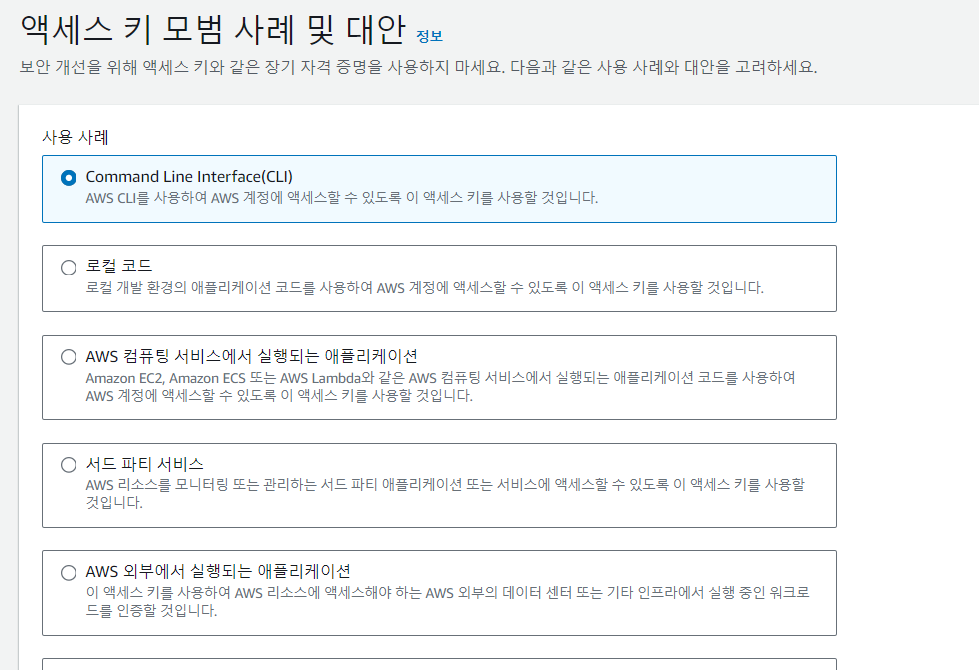

Then create an access key for the user.

Once the access key exists, it has to be registered with ‘aws configure’ before AWS authentication works, so install the AWS CLI.

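Then run ‘aws configure’ and enter the access key information. The provider in ‘00_main.tf’ reads the ‘oliveyoung’ profile, so save the keys under that name with ‘aws configure --profile oliveyoung’; plain ‘aws configure’ writes them to the ‘default’ profile instead.

AWS authentication is now complete.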
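To confirm which identity Terraform actually authenticates as, a small read-only check like the following can be added to any ‘.tf’ file once the provider in ‘00_main.tf’ (next section) is configured. It creates nothing; the output names are hypothetical.

```hcl
# Read-only lookup of the credentials Terraform is currently using
data "aws_caller_identity" "current" {}

# Hypothetical output names
output "caller-account-id" {
  value = data.aws_caller_identity.current.account_id
}

output "caller-arn" {
  value = data.aws_caller_identity.current.arn # should be the IAM user created above
}
```

After ‘terraform apply’, the ARN should name the IAM user whose access key you entered.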

3. Set up the VPC

First, let's try a simple ‘terraform init’, ‘terraform plan’, and ‘terraform apply’.

Create ‘00_main.tf’:

```hcl
####################################################################################################
## Configure the AWS Provider
####################################################################################################
provider "aws" {
  profile = var.terraform_aws_profile
  region  = var.aws_region

  default_tags {
    tags = {
      managed_by = "terraform"
    }
  }
}
```
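The post doesn't pin Terraform or provider versions. A sketch of a ‘terraform’ block that could sit in ‘00_main.tf’; the version numbers are assumptions: ‘~> 5.0’ keeps the AWS provider on major version 5, which the EKS module (‘~> 20.0’) targets, and the module still enforces its own exact minimum on top of this.

```hcl
terraform {
  # Assumed minimum; the EKS module 20.x declares its own required_version as well
  required_version = ">= 1.3"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # any 5.x release; modules may require a higher 5.x minimum
    }
  }
}
```

Pinning versions keeps a fresh ‘terraform init’ on another machine from silently pulling a newer major provider release.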

Create ‘variables.tf’:

```hcl
####################################################################################################
# Credentials are not passed as variables; they come from the AWS CLI profile below.
#variable "aws_access_key" {
#  type        = string
#  description = "AWS Access Key"
#}
#
#variable "aws_secret_key" {
#  type        = string
#  description = "AWS Secret Key"
#}
#
#variable "aws_session_token" {
#  type        = string
#  description = "AWS Session Token"
#}

variable "pem_location" {
  type    = string
  default = "."
}

# Named profile saved with `aws configure --profile`
variable "terraform_aws_profile" {
  type    = string
  default = "oliveyoung"
}

variable "terraform_workspace-name" {
  type    = string
  default = "oliveyoung"
}

variable "aws_region" {
  type    = string
  default = "ap-northeast-2" # Seoul
}

####################################################################################################
## EKS Variable
####################################################################################################
variable "cluster-name" {
  type        = string
  description = "AWS kubernetes cluster name"
  default     = "oliveyoung-cluster"
}

variable "cluster-version" {
  type        = string
  description = "AWS EKS supported Cluster Version to current use"
  default     = "1.27" # check which Kubernetes versions EKS currently supports before applying
}
```
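If you ever pass keys as variables (the commented-out blocks above) instead of using the CLI profile, one safer shape is to leave them without defaults and mark them ‘sensitive’. Terraform then takes the values from ‘TF_VAR_’-prefixed environment variables (for example ‘TF_VAR_aws_access_key’), so they never sit in a committed file; ‘sensitive’ itself only redacts the values in plan/apply output. A sketch, not what this post uses:

```hcl
# Sketch only; this post authenticates with the "oliveyoung" CLI profile instead.
# No defaults: values come from TF_VAR_aws_access_key / TF_VAR_aws_secret_key,
# or Terraform prompts for them when run interactively.
variable "aws_access_key" {
  type        = string
  description = "AWS Access Key"
  sensitive   = true # redacted in plan/apply output
}

variable "aws_secret_key" {
  type        = string
  description = "AWS Secret Key"
  sensitive   = true
}

# The provider in 00_main.tf would then use these in place of `profile`:
# provider "aws" {
#   region     = var.aws_region
#   access_key = var.aws_access_key
#   secret_key = var.aws_secret_key
# }
```

The AWS provider also reads ‘AWS_ACCESS_KEY_ID’ and ‘AWS_SECRET_ACCESS_KEY’ from the environment on its own, so this variable route is only needed when the keys must flow through Terraform variables.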

Create ‘01_vpc.tf’.

It creates the VPC, subnets, internet gateway, NAT gateways, route tables, Elastic IPs, and security groups.

```hcl
####################################################################################################
## Create a VPC
####################################################################################################
resource "aws_vpc" "oliveyoung-vpc-ap-northeast-2" { # name used to identify the resource in Terraform
  cidr_block           = "10.0.0.0/16"
  enable_dns_support   = true
  enable_dns_hostnames = true # both DNS settings are required for private DNS on interface endpoints

  tags = {
    Name = "oliveyoung-vpc-ap-northeast-2" # name shown in the AWS console
  }
}

####################################################################################################
## Create Public & Private Subnet
####################################################################################################
# kubernetes.io/cluster marks subnets the cluster may use; kubernetes.io/role/elb marks subnets
# for internet-facing load balancers, kubernetes.io/role/internal-elb for internal ones.
resource "aws_subnet" "oliveyoung-2a-pub-01" {
  vpc_id                  = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block              = "10.0.0.0/24"
  availability_zone       = "ap-northeast-2a"
  map_public_ip_on_launch = true # instances launched here (the bastion) get a public IP

  tags = {
    Name                                        = "oliveyoung-2a-pub-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/elb"                    = "1"
  }
}

resource "aws_subnet" "oliveyoung-2a-pri-eks-01" {
  vpc_id            = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block        = "10.0.1.0/24"
  availability_zone = "ap-northeast-2a"

  tags = {
    Name                                        = "oliveyoung-2a-pri-eks-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/internal-elb"           = "1"
  }
}

resource "aws_subnet" "oliveyoung-2a-pri-db-01" {
  vpc_id            = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block        = "10.0.2.0/24"
  availability_zone = "ap-northeast-2a"

  tags = {
    Name                                        = "oliveyoung-2a-pri-db-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/internal-elb"           = "1"
  }
}

resource "aws_subnet" "oliveyoung-2c-pub-01" {
  vpc_id            = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block        = "10.0.100.0/24"
  availability_zone = "ap-northeast-2c"

  tags = {
    Name                                        = "oliveyoung-2c-pub-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/elb"                    = "1"
  }
}

resource "aws_subnet" "oliveyoung-2c-pri-eks-01" {
  vpc_id            = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block        = "10.0.101.0/24"
  availability_zone = "ap-northeast-2c"

  tags = {
    Name                                        = "oliveyoung-2c-pri-eks-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/internal-elb"           = "1"
  }
}

resource "aws_subnet" "oliveyoung-2c-pri-db-01" {
  vpc_id            = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  cidr_block        = "10.0.102.0/24"
  availability_zone = "ap-northeast-2c"

  tags = {
    Name                                        = "oliveyoung-2c-pri-db-01"
    "kubernetes.io/cluster/${var.cluster-name}" = "shared"
    "kubernetes.io/role/internal-elb"           = "1"
  }
}

####################################################################################################
## Create Internet gateway & Nat gateway
####################################################################################################
resource "aws_internet_gateway" "oliveyoung-igw-ap" {
  vpc_id = aws_vpc.oliveyoung-vpc-ap-northeast-2.id

  tags = {
    Name = "oliveyoung-igw-ap"
  }
}

# One Elastic IP + NAT gateway per AZ, each placed in that AZ's public subnet
resource "aws_eip" "nat-eip-01" {
  domain = "vpc"

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_nat_gateway" "oliveyoung-natgw-ap-01" {
  subnet_id     = aws_subnet.oliveyoung-2a-pub-01.id
  allocation_id = aws_eip.nat-eip-01.id

  tags = {
    Name = "oliveyoung-natgw-ap-01"
  }

  # Make sure the internet gateway exists before the NAT gateway is created
  depends_on = [aws_internet_gateway.oliveyoung-igw-ap]
}

resource "aws_eip" "nat-eip-02" {
  domain = "vpc"

  lifecycle {
    create_before_destroy = true
  }
}

resource "aws_nat_gateway" "oliveyoung-natgw-ap-02" {
  subnet_id     = aws_subnet.oliveyoung-2c-pub-01.id
  allocation_id = aws_eip.nat-eip-02.id

  tags = {
    Name = "oliveyoung-natgw-ap-02"
  }

  depends_on = [aws_internet_gateway.oliveyoung-igw-ap]
}

####################################################################################################
## Create Route Table & Route
####################################################################################################
# Public route tables: default route to the internet gateway
resource "aws_route_table" "oliveyoung-2a-pub-rtb" {
  vpc_id = aws_vpc.oliveyoung-vpc-ap-northeast-2.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.oliveyoung-igw-ap.id
  }

  tags = {
    Name = "oliveyoung-2a-pub-rtb"
  }
}

resource "aws_route_table" "oliveyoung-2c-pub-rtb" {
  vpc_id = aws_vpc.oliveyoung-vpc-ap-northeast-2.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.oliveyoung-igw-ap.id
  }

  tags = {
    Name = "oliveyoung-2c-pub-rtb"
  }
}

resource "aws_route_table_association" "oliveyoung-2a-pub-rtb-assoc1" {
  route_table_id = aws_route_table.oliveyoung-2a-pub-rtb.id
  subnet_id      = aws_subnet.oliveyoung-2a-pub-01.id
}

resource "aws_route_table_association" "oliveyoung-2c-pub-rtb-assoc2" {
  route_table_id = aws_route_table.oliveyoung-2c-pub-rtb.id
  subnet_id      = aws_subnet.oliveyoung-2c-pub-01.id
}

# Private route tables: default route to the NAT gateway in the same AZ
resource "aws_route_table" "oliveyoung-2a-pri-rtb-01" {
  vpc_id = aws_vpc.oliveyoung-vpc-ap-northeast-2.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.oliveyoung-natgw-ap-01.id
  }

  tags = {
    Name = "oliveyoung-2a-pri-rtb-01"
  }
}

resource "aws_route_table" "oliveyoung-2c-pri-rtb-01" {
  vpc_id = aws_vpc.oliveyoung-vpc-ap-northeast-2.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.oliveyoung-natgw-ap-02.id
  }

  tags = {
    Name = "oliveyoung-2c-pri-rtb-01"
  }
}

resource "aws_route_table_association" "oliveyoung-2a-pri-rtb-01-assoc1" {
  route_table_id = aws_route_table.oliveyoung-2a-pri-rtb-01.id
  subnet_id      = aws_subnet.oliveyoung-2a-pri-eks-01.id
}

resource "aws_route_table_association" "oliveyoung-2a-pri-rtb-01-assoc2" {
  route_table_id = aws_route_table.oliveyoung-2a-pri-rtb-01.id
  subnet_id      = aws_subnet.oliveyoung-2a-pri-db-01.id
}

resource "aws_route_table_association" "oliveyoung-2c-pri-rtb-01-assoc1" {
  route_table_id = aws_route_table.oliveyoung-2c-pri-rtb-01.id
  subnet_id      = aws_subnet.oliveyoung-2c-pri-eks-01.id
}

resource "aws_route_table_association" "oliveyoung-2c-pri-rtb-01-assoc2" {
  route_table_id = aws_route_table.oliveyoung-2c-pri-rtb-01.id
  subnet_id      = aws_subnet.oliveyoung-2c-pri-db-01.id
}

####################################################################################################
## Create Security Group
####################################################################################################
# Bastion: no inbound rules (access goes through SSM), all outbound traffic allowed
resource "aws_security_group" "oliveyoung-bastion-sg" {
  name        = "oliveyoung-bastion-sg"
  description = "oliveyoung-bastion-sg"
  vpc_id      = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
}

resource "aws_security_group_rule" "allow-all-ports-egress-01" {
  type              = "egress"
  description       = "all ports"
  security_group_id = aws_security_group.oliveyoung-bastion-sg.id
  from_port         = 0
  to_port           = 0
  protocol          = "-1" # all protocols
  cidr_blocks       = ["0.0.0.0/0"]
}

# SSM interface endpoints: accept HTTPS (443) from the SSM agents
resource "aws_security_group" "oliveyoung-ssm-endpoint-sg" {
  name        = "oliveyoung-ssm-endpoint-sg"
  description = "oliveyoung-ssm-endpoint-sg"
  vpc_id      = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
}

resource "aws_security_group_rule" "allow-https" {
  type              = "ingress"
  description       = "https"
  security_group_id = aws_security_group.oliveyoung-ssm-endpoint-sg.id
  from_port         = 443
  to_port           = 443
  protocol          = "tcp"
  cidr_blocks       = ["0.0.0.0/0"]
}

resource "aws_security_group_rule" "allow-all-ports-egress-02" {
  type              = "egress"
  description       = "all ports"
  security_group_id = aws_security_group.oliveyoung-ssm-endpoint-sg.id
  from_port         = 0
  to_port           = 0
  protocol          = "-1"
  cidr_blocks       = ["0.0.0.0/0"]
}
```
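The six subnet CIDRs follow a simple pattern: each is a /24 carved out of the 10.0.0.0/16 VPC, with the third octet as the subnet number. Terraform's ‘cidrsubnet(prefix, newbits, netnum)’ function computes exactly this (16 + 8 new bits = /24), so the literal CIDRs could be derived rather than typed. A sketch with hypothetical local names; each comment shows what the call returns:

```hcl
# Sketch: derive the subnet CIDRs used above from the VPC CIDR (local names are hypothetical)
locals {
  vpc_cidr = "10.0.0.0/16"

  # cidrsubnet(prefix, newbits, netnum): /16 + 8 bits = /24, netnum becomes the third octet
  subnet_cidrs = {
    "2a-pub-01"     = cidrsubnet(local.vpc_cidr, 8, 0)   # 10.0.0.0/24
    "2a-pri-eks-01" = cidrsubnet(local.vpc_cidr, 8, 1)   # 10.0.1.0/24
    "2a-pri-db-01"  = cidrsubnet(local.vpc_cidr, 8, 2)   # 10.0.2.0/24
    "2c-pub-01"     = cidrsubnet(local.vpc_cidr, 8, 100) # 10.0.100.0/24
    "2c-pri-eks-01" = cidrsubnet(local.vpc_cidr, 8, 101) # 10.0.101.0/24
    "2c-pri-db-01"  = cidrsubnet(local.vpc_cidr, 8, 102) # 10.0.102.0/24
  }
}
```

A subnet could then use, for example, ‘cidr_block = local.subnet_cidrs["2a-pub-01"]’, and ‘terraform console’ can evaluate such expressions before anything is applied.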

Running ‘terraform init’ gives the following.

Running ‘terraform plan’ gives the following.

Running ‘terraform apply’ creates all the VPC-related resources.

For example, the subnets:
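To check the created resources from the terminal as well as the console, outputs can print their IDs at the end of ‘terraform apply’, and ‘terraform output’ shows them again later. A sketch; the output names are hypothetical:

```hcl
# Hypothetical outputs summarizing what 01_vpc.tf created
output "vpc-id" {
  value = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
}

output "private-eks-subnet-ids" {
  value = [
    aws_subnet.oliveyoung-2a-pri-eks-01.id,
    aws_subnet.oliveyoung-2c-pri-eks-01.id,
  ]
}

# Public IPs the private subnets use for outbound traffic
output "nat-public-ips" {
  value = [aws_eip.nat-eip-01.public_ip, aws_eip.nat-eip-02.public_ip]
}
```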

4. Set up EC2

```hcl
####################################################################################################
## Create ec2 instance for Bastion
####################################################################################################
# Instance profile that hands the SSM role to the EC2 instance.
# aws_iam_role.ssm-role-01 must be declared elsewhere in the configuration.
resource "aws_iam_instance_profile" "oliveyoung-bastion-ssm-profile" {
  name = "oliveyoung-bastion-ssm-profile"
  role = aws_iam_role.ssm-role-01.name
}

resource "aws_instance" "oliveyoung-bastion" {
  ami                  = "ami-008d41dbe16db6778" # AMI IDs are region-specific (ap-northeast-2 here)
  instance_type        = "t3.medium"
  subnet_id            = aws_subnet.oliveyoung-2a-pub-01.id
  iam_instance_profile = aws_iam_instance_profile.oliveyoung-bastion-ssm-profile.name

  vpc_security_group_ids = [
    aws_security_group.oliveyoung-bastion-sg.id,
  ]

  tags = {
    Name = "oliveyoung-bastion"
  }
}

# Check bastion public ip
output "bastion-public-ip" {
  value = aws_instance.oliveyoung-bastion.public_ip
}
```

Create the bastion server with the code above.

You can then connect to the bastion server via SSM, as shown below.
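The instance profile above points at ‘aws_iam_role.ssm-role-01’, which the post doesn't show, so ‘terraform plan’ fails on an undeclared resource until that role exists. A minimal sketch of it, assuming the bastion only needs the AWS-managed ‘AmazonSSMManagedInstanceCore’ policy; the role's ‘name’ and the output name are hypothetical:

```hcl
# Role that EC2 instances are allowed to assume (trust policy for the EC2 service)
resource "aws_iam_role" "ssm-role-01" {
  name = "oliveyoung-ssm-role-01" # hypothetical name

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

# AWS-managed policy that lets the SSM Agent register the instance and open sessions
resource "aws_iam_role_policy_attachment" "ssm-role-01-core" {
  role       = aws_iam_role.ssm-role-01.name
  policy_arn = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
}

# Instance ID to pass to `aws ssm start-session --target`
output "bastion-instance-id" {
  value = aws_instance.oliveyoung-bastion.id
}
```

With the instance ID, ‘aws ssm start-session --target <instance-id> --profile oliveyoung’ opens a shell from your own machine; the AWS CLI needs the Session Manager plugin installed for this command.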

5. Set up SSM

This setup lets you connect via SSM to servers in private subnets.

It requires three interface endpoints, one each for the ssm, ssmmessages, and ec2messages services.

```hcl
resource "aws_vpc_endpoint" "ssm-endpoint-ssm" {
  vpc_id              = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  service_name        = "com.amazonaws.${var.aws_region}.ssm"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = [aws_subnet.oliveyoung-2a-pri-eks-01.id, aws_subnet.oliveyoung-2c-pri-eks-01.id]
  security_group_ids  = [aws_security_group.oliveyoung-ssm-endpoint-sg.id]
  private_dns_enabled = true
}

resource "aws_vpc_endpoint" "ssm-endpoint-ssmmessages" {
  vpc_id              = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  service_name        = "com.amazonaws.${var.aws_region}.ssmmessages"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = [aws_subnet.oliveyoung-2a-pri-eks-01.id, aws_subnet.oliveyoung-2c-pri-eks-01.id]
  security_group_ids  = [aws_security_group.oliveyoung-ssm-endpoint-sg.id]
  private_dns_enabled = true
}

resource "aws_vpc_endpoint" "ssm-endpoint-ec2messages" {
  vpc_id              = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  service_name        = "com.amazonaws.${var.aws_region}.ec2messages"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = [aws_subnet.oliveyoung-2a-pri-eks-01.id, aws_subnet.oliveyoung-2c-pri-eks-01.id]
  security_group_ids  = [aws_security_group.oliveyoung-ssm-endpoint-sg.id]
  private_dns_enabled = true
}
```
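The three endpoint blocks differ only in the service name, so they could be generated from a single block with ‘for_each’. A sketch meant to replace the three blocks above, not sit beside them; the resource name ‘ssm-endpoints’ is hypothetical. Because the resource addresses change, the ‘moved’ blocks tell Terraform the existing endpoints were only renamed, so an already-applied setup is not destroyed and recreated:

```hcl
# One interface endpoint per SSM-related service
resource "aws_vpc_endpoint" "ssm-endpoints" {
  for_each = toset(["ssm", "ssmmessages", "ec2messages"])

  vpc_id              = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  service_name        = "com.amazonaws.${var.aws_region}.${each.key}"
  vpc_endpoint_type   = "Interface"
  subnet_ids          = [aws_subnet.oliveyoung-2a-pri-eks-01.id, aws_subnet.oliveyoung-2c-pri-eks-01.id]
  security_group_ids  = [aws_security_group.oliveyoung-ssm-endpoint-sg.id]
  private_dns_enabled = true
}

# Map the old addresses onto the new for_each instances (Terraform 1.1+)
moved {
  from = aws_vpc_endpoint.ssm-endpoint-ssm
  to   = aws_vpc_endpoint.ssm-endpoints["ssm"]
}

moved {
  from = aws_vpc_endpoint.ssm-endpoint-ssmmessages
  to   = aws_vpc_endpoint.ssm-endpoints["ssmmessages"]
}

moved {
  from = aws_vpc_endpoint.ssm-endpoint-ec2messages
  to   = aws_vpc_endpoint.ssm-endpoints["ec2messages"]
}
```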

6. Set up EKS

Create ‘04_eks.tf’ and add the following code.

```hcl
####################################################################################################
## Create eks cluster
####################################################################################################
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.0"

  cluster_name    = var.cluster-name
  cluster_version = var.cluster-version

  cluster_endpoint_public_access  = true
  cluster_endpoint_private_access = true

  cluster_addons = {
    coredns = {
      most_recent = true
    }
    kube-proxy = {
      most_recent = true
    }
    vpc-cni = {
      most_recent = true
      # Use the IRSA role created by module.vpc_cni_irsa below
      # (add-on entries have no cluster_name attribute)
      service_account_role_arn = module.vpc_cni_irsa.iam_role_arn
    }
    aws-ebs-csi-driver = {
      # The driver needs IAM permissions of its own to provision EBS volumes
      most_recent = true
    }
  }

  vpc_id     = aws_vpc.oliveyoung-vpc-ap-northeast-2.id
  subnet_ids = [aws_subnet.oliveyoung-2a-pri-eks-01.id, aws_subnet.oliveyoung-2c-pri-eks-01.id]

  # EKS Managed Node Group
  eks_managed_node_group_defaults = {
    instance_types = ["t3.medium"]
  }

  eks_managed_node_groups = {
    eks-node = {
      min_size     = 1
      max_size     = 6
      desired_size = 4

      instance_types = ["t3.medium"]
    }
  }
}

# IAM role for the VPC CNI plugin's service account (kube-system/aws-node)
module "vpc_cni_irsa" {
  source  = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"
  version = "~> 4.12"

  role_name_prefix      = "VPC-CNI-IRSA"
  attach_vpc_cni_policy = true
  vpc_cni_enable_ipv4   = true

  oidc_providers = {
    main = {
      provider_arn               = module.eks.oidc_provider_arn
      namespace_service_accounts = ["kube-system:aws-node"]
    }
  }
}

####################################################################################################
## Load Balancer Controller setup
## To use Ingress on EKS, the load balancer controller must be set up.
## Reference: https://docs.aws.amazon.com/ko_kr/eks/latest/userguide/aws-load-balancer-controller.html
####################################################################################################

####################################################################################################
# Local variables
# Add an lb_controller_iam_role_name entry each time another Kubernetes cluster is added.
####################################################################################################
locals {
  # Role name for data-eks
  oliveyoung_eks_lb_controller_iam_role_name = "oliveyoung-eks-aws-lb-controller-role"
  k8s_aws_lb_service_account_namespace       = "kube-system"
  lb_controller_service_account_name         = "aws-load-balancer-controller"
}

####################################################################################################
# Add EKS cluster authentication data source
####################################################################################################
# Short-lived token for authenticating to the cluster (e.g. from a kubernetes or helm provider)
data "aws_eks_cluster_auth" "oliveyoung-eks" {
  name = var.cluster-name
}

####################################################################################################
# Load Balancer Controller ROLE setup
####################################################################################################
module "oliveyoung_eks_lb_controller_role" {
  source  = "terraform-aws-modules/iam/aws//modules/iam-assumable-role-with-oidc"
  version = "v5.1.0"

  create_role                   = true
  role_name                     = local.oliveyoung_eks_lb_controller_iam_role_name
  role_path                     = "/"
  role_description              = "Used by AWS Load Balancer Controller for EKS"
  role_permissions_boundary_arn = ""

  # OIDC issuer URL without the https:// scheme
  provider_url = replace(module.eks.cluster_oidc_issuer_url, "https://", "")

  # Only the controller's service account may assume this role
  oidc_fully_qualified_subjects = [
    "system:serviceaccount:${local.k8s_aws_lb_service_account_namespace}:${local.lb_controller_service_account_name}"
  ]

  oidc_fully_qualified_audiences = [
    "sts.amazonaws.com"
  ]
}
```
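The ‘oliveyoung_eks_lb_controller_role’ module above creates the role and its OIDC trust policy but attaches no permissions, so the controller would be denied when it tries to manage load balancers. One option, assuming version 5.x of the same ‘terraform-aws-modules/iam’ package, is its ‘iam-role-for-service-accounts-eks’ submodule, which can attach the controller policy itself. It would replace the module above rather than sit beside it, since both use the same role name; the module label is hypothetical:

```hcl
module "oliveyoung_eks_lb_controller_irsa" {
  source  = "terraform-aws-modules/iam/aws//modules/iam-role-for-service-accounts-eks"
  version = "~> 5.0" # assumed; same package as the modules above

  role_name = local.oliveyoung_eks_lb_controller_iam_role_name

  # Attach the IAM permissions the AWS Load Balancer Controller needs
  attach_load_balancer_controller_policy = true

  # Trust only kube-system/aws-load-balancer-controller via the cluster's OIDC provider
  oidc_providers = {
    main = {
      provider_arn = module.eks.oidc_provider_arn
      namespace_service_accounts = [
        "${local.k8s_aws_lb_service_account_namespace}:${local.lb_controller_service_account_name}",
      ]
    }
  }
}
```

The controller itself still has to be installed in the cluster (the AWS guide linked in the comments covers this), with its service account annotated with the role ARN, available as ‘module.oliveyoung_eks_lb_controller_irsa.iam_role_arn’.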

*If ‘terraform plan’ shows the error below, run ‘terraform init’ again: the modules newly referenced in ‘04_eks.tf’ have to be downloaded first.

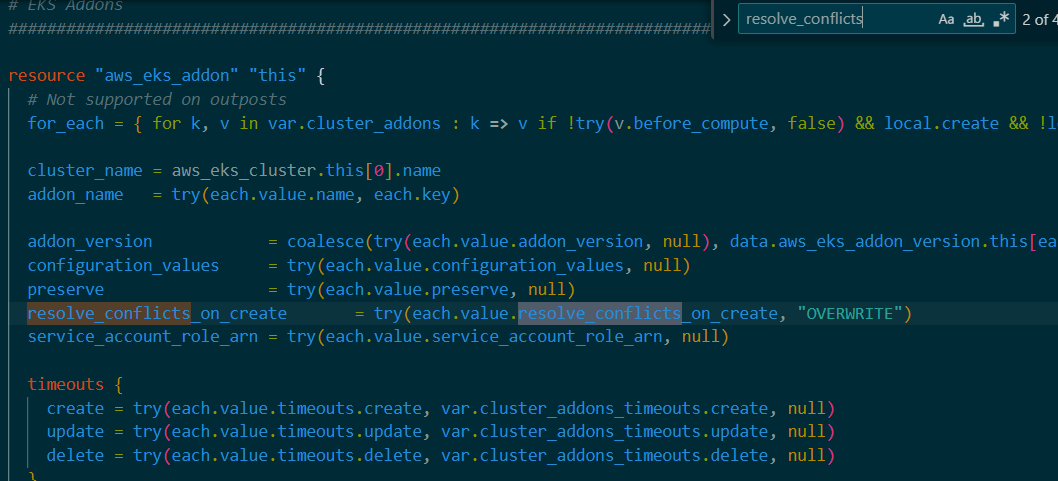

If ‘terraform plan’ shows a warning like the one below, change ‘resolve_conflicts’ to ‘resolve_conflicts_on_create’ or ‘resolve_conflicts_on_update’.

I changed it to ‘resolve_conflicts_on_create’ (4 places in total).
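The warning comes from the ‘resolve_conflicts’ argument of the ‘aws_eks_addon’ resource, which AWS provider 5.x deprecates in favor of ‘resolve_conflicts_on_create’ and ‘resolve_conflicts_on_update’. Rather than editing the downloaded module, a sketch assuming EKS module 20.x, whose add-on entries accept these two keys, sets them per add-on in ‘04_eks.tf’. This is a fragment of one ‘cluster_addons’ entry, and ‘OVERWRITE’ is an assumed choice:

```hcl
  # Fragment: inside module "eks" in 04_eks.tf (not a standalone file)
  cluster_addons = {
    coredns = {
      most_recent                 = true
      resolve_conflicts_on_create = "OVERWRITE" # allowed: NONE, OVERWRITE
      resolve_conflicts_on_update = "OVERWRITE" # allowed: NONE, OVERWRITE, PRESERVE
    }
    # kube-proxy, vpc-cni, aws-ebs-csi-driver would follow the same shape
  }
```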

Next, you can confirm that the nodes were created, as follows.
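To point ‘kubectl’ at the new cluster and check the nodes from the terminal, the cluster name and endpoint can be exposed as outputs. A sketch; the output names are hypothetical:

```hcl
# Hypothetical outputs for connecting kubectl to the cluster
output "eks-cluster-name" {
  value = module.eks.cluster_name
}

output "eks-cluster-endpoint" {
  value = module.eks.cluster_endpoint
}
```

With the name, ‘aws eks update-kubeconfig --region ap-northeast-2 --name oliveyoung-cluster --profile oliveyoung’ adds a kubeconfig entry, after which ‘kubectl get nodes’ should list the nodes. With EKS module 20.x the identity that creates the cluster is not given cluster-admin access by default; if ‘kubectl’ reports it is unauthorized, setting ‘enable_cluster_creator_admin_permissions = true’ in ‘module "eks"’ is one way to grant it.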

However, SSM connections to the nodes didn't work, so I added ‘AmazonSSMManagedInstanceCore’ to the nodes' IAM role.

Let's go through the steps below.

Open ‘.terraform/modules/eks/node_groups.tf’ and find where the IAM role is specified.

Then modify ‘iam_role_additional_policies’ as in the code below.

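```hcl
# Excerpt from .terraform/modules/eks/node_groups.tf
iam_role_additional_policies = merge(
  # Policies set on this node group, else those in eks_managed_node_group_defaults, else none
  lookup(
    each.value,
    "iam_role_additional_policies",
    lookup(var.eks_managed_node_group_defaults, "iam_role_additional_policies", {})
  ),
  # Always add the SSM policy so nodes can be reached through Session Manager
  {
    "AmazonSSMManagedInstanceCore" = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
  }
)
```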

You can also change the role name through ‘iam_role_name’, as shown below.

Now you can connect to the EKS nodes via SSM, as shown below.
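Files under ‘.terraform/’ are written by ‘terraform init’, are normally kept out of version control, and are replaced whenever the module is downloaded again (for example with ‘terraform init -upgrade’), so edits made there are easy to lose. The EKS module accepts the same settings as node group inputs, so the change can live in ‘04_eks.tf’ instead. A sketch of the ‘eks-node’ group with the policy and a fixed role name; the role name is a hypothetical example:

```hcl
  # Fragment: inside module "eks" in 04_eks.tf
  eks_managed_node_groups = {
    eks-node = {
      min_size       = 1
      max_size       = 6
      desired_size   = 4
      instance_types = ["t3.medium"]

      # Extra policy on the node IAM role so nodes register with Systems Manager
      iam_role_additional_policies = {
        AmazonSSMManagedInstanceCore = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
      }

      # Optional: use this exact role name instead of a generated prefix
      iam_role_name            = "oliveyoung-eks-node-role" # hypothetical
      iam_role_use_name_prefix = false
    }
  }
```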